HashiCorp Terraform is an open-source infrastructure as code tool that simplifies the operational overhead associated with deploying and managing Google Cloud infrastructure. Terraform helps customers quickly and safely migrate to Google Cloud, manage operations, and scale cloud consumption.

Leaders from Google Cloud and HashiCorp Terraform recently came together for an Ask Me Anything event to share an overview of our partnership and solutions, and to answer community questions about all things Terraform on Google Cloud.

In this article, we summarize the key takeaways from the event, including the recording, a resource roundup, and written Q&A. Let’s dive in!

Event recording

Tip 👉 Use the timestamp links in the YouTube description to jump to the topics you care about most.

Terraform solutions overview

Terraform is an open-source infrastructure as code tool that helps you create, change, and improve infrastructure safely and efficiently. Terraform can manage infrastructure on virtually any platform or service that supports an API, including on-premises, hybrid, and multi-cloud environments.

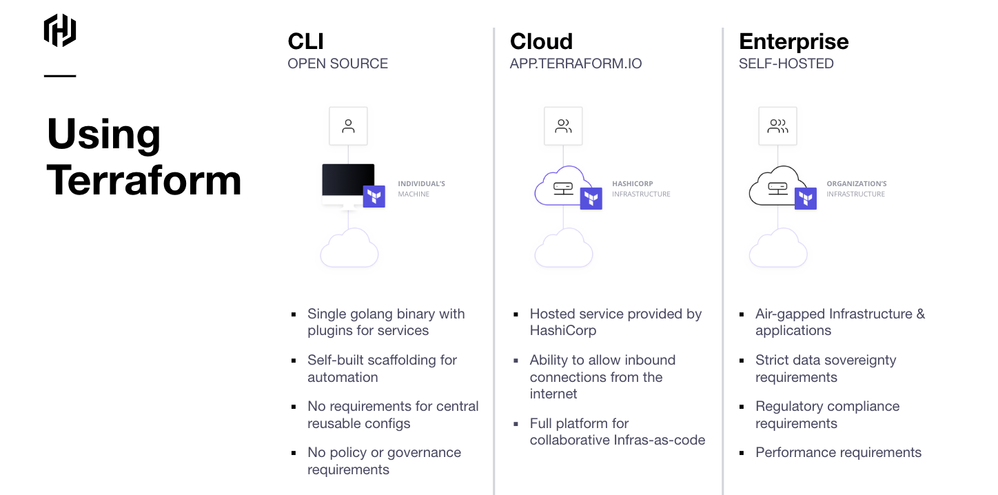

There are three editions of Terraform depending on your needs: open source, Terraform Cloud, and Terraform Enterprise.

- Open source (Terraform CLI): Free to use and the best option for small teams and projects.

- Terraform Cloud: Paid subscription service with additional features and functionality, including centralized management, collaboration, and compliance.

- Terraform Enterprise: Paid subscription service designed for large enterprises that need a highly scalable and secure infrastructure as code solution.

See the event recording, beginning at 8:28, for additional details and considerations for each Terraform edition.

Why Terraform?

There are many reasons why you and your organization can benefit from using Terraform, but we’ve summarized the primary use cases below.

- Provisioning and automation: Standardize your operations with a common, collaborative workflow to provision, secure, govern, and audit any infrastructure. You can use the same workflow to manage multiple cloud providers and automation with CI/CD integrations, API access, and third-party services.

- Developer self-service: No-code provisioning lets platform teams manage a catalog of no-code modules for users like app developers to deploy without prior Terraform experience.

- Policy as code: Sentinel’s policy as code framework is embedded in the Terraform provisioning workflow to maintain consistent policy enforcement across all operations without manual reviews. By centralizing provisioning through a standardized infrastructure as code workflow, you can stay compliant, reduce the risk of misconfigurations, and save on cloud costs.

- Visibility and optimization: Gain instant visibility into the state of cloud infrastructure with health assessments. Drift Detection for Terraform preemptively detects when a resource has changed from the expected state, helping reduce security and operational risks. Customizable notifications alert operators when drift is detected or a health assessment fails.

Google Cloud’s commitment to Terraform

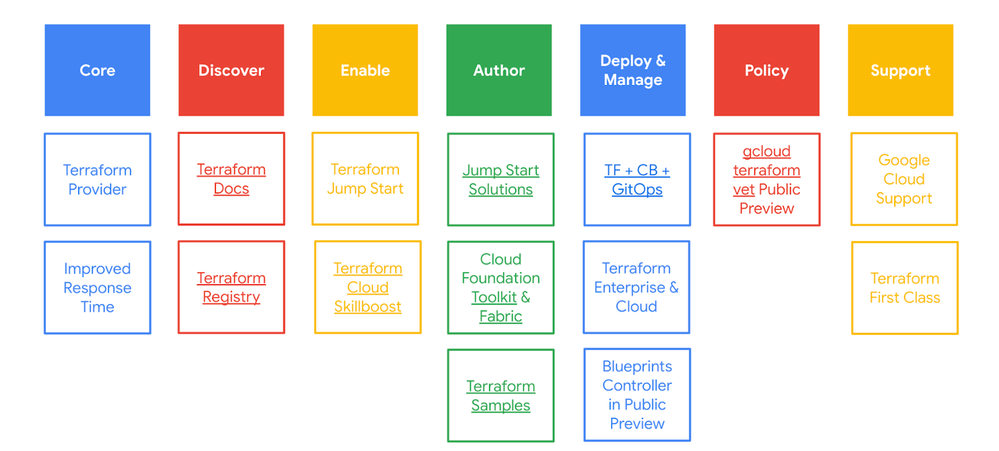

Google is committed to Terraform and is working to make it the best possible tool for managing infrastructure and operations.

- Core: We’re committed to improving the core functionality of the Terraform Provider for Google Cloud, including performance, reliability, and security.

- Discovery: We’re making it easier to discover and use Terraform resources, including documentation, tutorials, and the Terraform Registry, where users can easily find, share, and install Terraform modules.

- Enable: In addition to documentation and modules, Google is committed to providing enablement and training resources to help users learn and use Terraform, including hands-on labs and training courses, certification programs, support forums, and live Q&A events.

- Author: We’re making it easier for users to author Terraform code and configurations with Jump Start solutions (pre-built solution templates), Terraform resource samples, and Cloud Foundation Toolkit & Fabric.

- Deploy & manage: We’re building closer integrations between Terraform and Google Cloud in partnership with HashiCorp. In Q3, we are launching a native Terraform config and deployment management product offering, Blueprints Controller, so you can automatically apply and manage Terraform manifests in your environment. We also provide best practice guidance on building self-managed IaC config management using Cloud Build.

- Policy: Google is providing Terraform support for policy-based management of infrastructure to more easily enforce security, compliance and other custom policies, including integration with Google Cloud's IAM and Cloud Audit Logging services.

- Support: We’re committed to providing support for users of Terraform on Google Cloud with improved response times for bug fixes and feature requests.

Terraform on Google Cloud resource roundup

Terraform on Google Cloud FAQs

1. How to avoid a single point of failure with a state file used for the entire GCP project? And is there any way to build/provision the entire DR site from scratch on GCP when something happens to the live GCP region with Terraform?

While it is easier to put everything in one state file per project, your apply time increases the more resources you add and it becomes a monolithic configuration. Break out dependencies into different states, with references to the project-level configuration. Most people divide into network and then components running on the network. This mitigates the overall blast radius of changes.

As for building / provisioning the entire DR site from scratch, use modules to make it easier to establish building blocks for an entire site. You could take the “easy” route and copy-paste every configuration from the original site but if you don’t parametrize everything, it will become a difficult task. Instead, parametrize region for the lowest-level resource (often networking) and output that for other resources to reference. This means you only need to make changes at the lowest-level set of resources and you can automate the sequence of provisioning for downstream dependencies.
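As a rough illustration of the advice above, the sketch below splits networking into its own state, parametrizes the region there, and lets a downstream configuration read it through outputs. The bucket name, state prefix, resource names, and CIDR range are all hypothetical placeholders, and the two "files" are shown in one block only for brevity.

```hcl
# ----- network/main.tf (lowest-level configuration, kept in its own state) -----
variable "project_id" {
  type = string
}

variable "region" {
  type    = string
  default = "us-central1" # change this one value to target the DR region
}

resource "google_compute_network" "main" {
  project                 = var.project_id
  name                    = "site-${var.region}"
  auto_create_subnetworks = false
}

resource "google_compute_subnetwork" "main" {
  project       = var.project_id
  name          = "site-${var.region}"
  region        = var.region
  network       = google_compute_network.main.id
  ip_cidr_range = "10.10.0.0/20" # hypothetical range
}

# Outputs that downstream configurations reference instead of hard-coding.
output "region" {
  value = var.region
}

output "subnetwork" {
  value = google_compute_subnetwork.main.self_link
}

# ----- app/main.tf (separate configuration and separate state) -----
data "terraform_remote_state" "network" {
  backend = "gcs"
  config = {
    bucket = "example-tf-state" # hypothetical state bucket
    prefix = "network"
  }
}

resource "google_compute_address" "app" {
  name         = "app-internal"
  address_type = "INTERNAL"
  region       = data.terraform_remote_state.network.outputs.region
  subnetwork   = data.terraform_remote_state.network.outputs.subnetwork
}
```

With this layout, rebuilding in another region means changing `region` in the network configuration and then re-applying the downstream configurations in order.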

2. What is the best non-HashiCorp CICD tool recommended by Hashicorp or Google for IAC pipelines with Terraform?

There’s not a “best” one to recommend because it depends on your organization’s approach to infrastructure as code deployment and how you want to secure access to GCP. If you’re using a CI/CD framework that runs on GKE, for example, it makes it a lot easier to use access scopes to run Terraform with least privilege access to the services it is configuring. You’ll also want to look for one that offers pipelines as code or templating to standardize stages for planning, applying, configuration scanning, and testing.

3. What is the right way to implement Terraform on GCP from scratch? I see many people have different opinions but there should be a best practice for starting with Terraform from 0 for an Enterprise Customer.

For enterprise customers getting started, we have a few options depending on your familiarity with Terraform and how much flexibility you would like to have in the process. We have the console UI-based onboarding tool, which is a great option for users getting started with Terraform and visually walks you through all the different steps for getting set up.

For more advanced users who are familiar with Terraform, we have Fabric, a landing zone template built with flexibility and modular components.

Finally, for the most security-conscious users, we have the security foundations blueprint, which offers out-of-the-box best practices.

4. What is HashiCorp's strategy regarding CDKTF, specifically on Google Cloud? Can you share the roadmap and any suggestions for Google Cloud customers?

Cloud Development Kit for Terraform (CDKTF) offers Google Cloud as a pre-built provider and tracks the latest version of 4.0 as of this event. We do not update the pre-built provider for major versions immediately just in case we have breaking changes. We don’t have any updates on the CDKTF roadmap specific to Google Cloud, but much of the work is focused on the core of CDKTF and continuing to grow support across programming languages.

5. HashiCorp products are in use in Enterprise companies and in the Finance industry. How did you achieve to make Terraform as an industry standard when it comes to automation?

While Terraform is widely adopted in the Financial Services sector, that adoption was an organic effect of Terraform’s growth strategy, a simple flywheel model:

1) Win the practitioner. There are now over 2 million installs of the tool daily, along with the launch of CDKTF and developer.hashicorp.com.

2) Grow the ecosystem. There are now 3000+ providers and that number is growing, along with community certifications.

3) Enable the customer. HashiCorp focuses a lot of enablement on the enterprise features that are required for auditability and regulatory compliance.

6. Google Cloud has a solution for IaC called "Deployment Manager". Because of multicloud capability, Terraform is mostly being chosen by the users. Terraform is killing the Deployment Manager usage. Maybe it would cause a sunset of the Deployment Manager. What do you think about it?

Google Cloud's Deployment Manager (DM) is still a supported solution for managing infrastructure on GCP. However, many of our customers prefer Terraform due to its multi-cloud capabilities and provider ecosystem. As a result, we recommend Terraform to our customers and we're also working on a Maturity Model that will help customers choose the best option for them.

Regarding sunsetting of Deployment Manager, as of today, we will continue to support Deployment Manager for some time because we have many customers who still rely on it and all of our Marketplace deployments are deployed via DM.

Deployment Manager is still a good choice for some users who want a simple and easy-to-use tool for managing infrastructure on GCP. However, Terraform is the de facto standard to provision infrastructure today and we intend to support our users and make it a first class experience on GCP.

We’re discussing the future of DM internally and will meet our customers to better understand their needs before making a decision. One possible scenario is that DM may support Terraform deployments through enhancements or we could potentially replace it with another solution. It's too early to say and I think what's more important is that Google is going all-in on Terraform and we’re meeting customers where they are.

7. How to 'Enable API' of the GCP service/resource using terraform for the first time? Can you give descriptive code for it or direct a source where to find it? How to use the public registry of terraform for GCP as modules and just make changes to tfvars?

Use https://registry.terraform.io/providers/hashicorp/google/latest/docs/resources/google_project_service. Set a list of services you want enabled for the project and use count or for_each to loop over and enable them. You’ll want to use a list so it’s easier to review your IaC and check what services you have enabled across different projects. There is no direct way to pass variables from one configuration into a module. What I do recommend is naming the variables the same as the module’s variables and setting as many opinionated defaults as possible. That way, you only need to specify the absolute necessary ones in tfvars.

We also have a project_services module which automates looping over the list and provides some additional functionality like creating service identities associated with an API.
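A minimal sketch of the list-plus-loop approach described above, using `for_each` over a list of services. The variable names and the default service list are illustrative assumptions; only the values you want to override would go in tfvars.

```hcl
variable "project_id" {
  type = string
}

# Keeping the services in one list makes it easy to review what is enabled.
variable "services" {
  type = list(string)
  default = [
    "compute.googleapis.com",
    "storage.googleapis.com",
  ]
}

resource "google_project_service" "enabled" {
  # for_each needs a set or map, so convert the list.
  for_each = toset(var.services)

  project = var.project_id
  service = each.value

  # Leave the API enabled if this resource is later removed from the config.
  disable_on_destroy = false
}
```

A matching tfvars file would then only need `project_id` and, if the defaults do not fit, `services`.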

8. Is there any plan to review GCP provider resources attributes consistent names? Today may change per provider, like network for some resources can reference VPC and other resources ip address ranges.

This is not planned at the moment. Most inconsistencies of this kind are present in the GCP APIs and Terraform is consistent with those.

9. Is there a possibility of adding/removing/updating annotations to Secret Manager secrets via Terraform? Is this feature going to be included in the roadmap soon?

As part of our program to have service teams support Terraform requests, this should be resolved by them if there is a strong need/urgency. We’re still onboarding teams to the program, so there is no guarantee that we’ll support this soon. However, you should reach out to your account team, who can help escalate this on their end.

10. Why would you use Terraform on Google Cloud? How to use it? Could you do a migration example?

There are many reasons why you’d use Terraform:

IaC: HCL is a declarative language. You describe what you want and Terraform figures out the “how”. Because it’s code, your deployments are documented and you get version control, rollback, approvals, and so on.

Multi-cloud: A single workflow creates resources on any cloud; only the code changes. For example, a VM in Azure and a VM in GCP use the same workflow with different parameters.

Well supported: Terraform is expected to be around for a long time. It is a widely adopted solution and Google is committed to supporting API coverage in Terraform, which will get even better over time.

How to use Terraform? You can choose to use Terraform OSS (CLI), Terraform Enterprise (CLI, API, UI), or Terraform Cloud depending on your needs.

Migration example:

If you're migrating from other providers, you have to replicate your deployments into GCP. It could be a manual process, but you can automate with reusable Modules.

If you want Terraform to manage resources already deployed on GCP, you can export your deployments from GCP into Terraform configuration manually or with one of our tools called bulk-export (it doesn’t support all resources today). You then import them with the Terraform import command.
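To make the import step concrete, here is a small sketch that brings an existing Cloud Storage bucket under Terraform management. It uses an `import` block, which is a declarative alternative to the import command and assumes Terraform 1.5 or later; the bucket name and location are hypothetical and must match the real resource.

```hcl
# Tell Terraform which existing resource maps to which address.
import {
  to = google_storage_bucket.legacy
  id = "example-legacy-bucket" # hypothetical: name of the existing bucket
}

# The configuration must describe the resource as it exists today,
# otherwise the next plan will propose changes.
resource "google_storage_bucket" "legacy" {
  name     = "example-legacy-bucket"
  location = "US"
}
```

Running a plan after adding this shows the resource as "will be imported" rather than "will be created", which is a useful check before applying.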

11. Best security practices when using Terraform for Google Cloud?

We have a security section in our Terraform best practices that we recommend for all customers. Key highlights are to always use remote state (GCS, TFC, etc.), protect the state file with correct IAM, and ensure that the identity used by Terraform has only the roles it needs, reducing blast radius.
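As a small sketch of the remote state recommendation, the backend block below stores state in a Cloud Storage bucket. The bucket name and prefix are hypothetical; the bucket is assumed to exist already, with IAM restricted to the identities that run Terraform.

```hcl
terraform {
  backend "gcs" {
    bucket = "example-tf-state" # hypothetical, pre-created and access-controlled
    prefix = "env/prod"         # one prefix per configuration keeps states separate
  }
}
```

Creating the state bucket outside the configuration that uses it avoids a chicken-and-egg problem during the first init.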

12. How to use dynamic block in GCP?

Dynamic blocks are used at the resource level to allow iteration of attributes. In the case of GCP, you might use a dynamic block with a google_compute_firewall resource. You have an arbitrary number of “allow” rules. Rather than explicitly hard-configure each rule, you could pass in a map of rules to a dynamic “allow” block and iterate over the map and its attributes. Refer to https://developer.hashicorp.com/terraform/language/expressions/dynamic-blocks.
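The firewall case described above could look roughly like this. The rule map, firewall name, network, and source range are illustrative assumptions.

```hcl
variable "allow_rules" {
  type = map(object({
    protocol = string
    ports    = list(string)
  }))
  default = {
    web = { protocol = "tcp", ports = ["80", "443"] }
    ssh = { protocol = "tcp", ports = ["22"] }
  }
}

resource "google_compute_firewall" "example" {
  name          = "example-allow"
  network       = "default"
  source_ranges = ["10.0.0.0/8"]

  # One "allow" block is generated per entry in the map.
  dynamic "allow" {
    for_each = var.allow_rules
    content {
      protocol = allow.value.protocol
      ports    = allow.value.ports
    }
  }
}
```

Inside `content`, the iterator takes the name of the block (`allow`), so `allow.key` is the map key and `allow.value` is the rule object.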

13. What is the best practice for developing, debugging, and troubleshooting a terraform script? Often a terraform script will plan successfully and then fail to apply. What are good practices to avoid these situations? What are best practices to test your deployment afterwards?

Most apply-time failures tend to be API errors. These could be due to various issues like API server-side validation, IAM permissions, or other internal errors. Currently the only way to test this is to apply the config in a test environment, creating real resources. Our best practices for testing Terraform lay out some possible tools for performing this kind of integration testing, including the blueprints test framework, which we maintain. The GCP provider is also continually improving and shifting validation left so it’s easier to catch a subset of these errors during terraform validate.

Learn additional details in the event recording starting at 51:15.

14. Can Terraform be used in any BI tool? or how might data engineering benefit from it?

Terraform can be used with BI tools in a few different ways. You can configure various tools with a Terraform provider, as long as one is available. It’s an easy way to set up different dashboards and other configurations as code and reproduce everything quickly. You can also retrieve data from Terraform state, which reflects a source of truth for any configuration or inventory. It’s JSON metadata that you can import and use in your tools to understand the configuration and state of assets. With respect to data engineering, Terraform is often used to set up data pipelines or jobs. For less dynamic environments, it’s useful for expressing relationships between components required for ingestion or consumption.

15. Please explain the architecture of provisioning Terraform with different cloud providers.

Terraform does not offer one data model for all clouds, so you’ll need to reference the provider for a different cloud. In general, avoid putting different cloud resources in the same module or configuration unless they are configured together (VPN or other cross-cloud services). You’ll also want to separate the modules, which makes it easier to test them independently.

I recommend separating each cloud provider into its own state. You want to be able to evolve resources in each provider independent of each other. If you have interactions between providers, those should go in their own state as well to be decoupled from individual resources (e.g., VPN connections between clouds). I also recommend breaking out modules for different providers as well. Some people do like to put multiple cloud providers into one module and set a toggle between them, but it makes it harder to test the module.

16. How do you create a VM with optional additional disk, which should get attached if created?

You can add additional disks to a VM by creating them in advance and specifying them as a “source” in the “attached_disk” block on google_compute_instance. Alternatively, you can use the google_compute_disk resource to create a disk and a google_compute_attached_disk to attach it to your instance.
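One way to sketch the second option, with the disk made optional through a boolean variable. The variable name, machine type, image, zone, and disk size are assumptions chosen for illustration.

```hcl
variable "create_data_disk" {
  type    = bool
  default = false
}

resource "google_compute_instance" "vm" {
  name         = "example-vm"
  machine_type = "e2-medium"
  zone         = "us-central1-a"

  boot_disk {
    initialize_params {
      image = "debian-cloud/debian-12"
    }
  }

  network_interface {
    network = "default"
  }

  # The attachment is managed by google_compute_attached_disk below, so the
  # instance should not try to reconcile its own attached_disk blocks.
  lifecycle {
    ignore_changes = [attached_disk]
  }
}

# Created only when the flag is true.
resource "google_compute_disk" "data" {
  count = var.create_data_disk ? 1 : 0

  name = "example-data"
  type = "pd-balanced"
  zone = google_compute_instance.vm.zone
  size = 50
}

# Attached only when the disk exists.
resource "google_compute_attached_disk" "data" {
  count = var.create_data_disk ? 1 : 0

  disk     = google_compute_disk.data[0].id
  instance = google_compute_instance.vm.id
}
```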

17. How do you create a service account and upload key directly as env variable?

Ideally, you would not need to create a service account key at all. If you are using Terraform within a GCP compute environment (GCE, GKE, Cloud Build, etc.), grant the permissions to the machine identity service account associated with that environment. If you are on a local machine, you can use gcloud and application default credentials, which are picked up by Terraform. If you are using TFC, a remote agent running on GCP or the dynamic credentials that Ray mentioned earlier, which use workload identity federation, are recommended.
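In practice, this keyless setup means the provider block carries no `credentials` argument at all. A minimal sketch, with a hypothetical project ID and region:

```hcl
provider "google" {
  project = "example-project" # hypothetical project ID
  region  = "us-central1"

  # No "credentials" argument here: the provider falls back to Application
  # Default Credentials, for example the attached service account on GCE,
  # GKE, or Cloud Build, or the result of
  # `gcloud auth application-default login` on a local machine.
}
```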

18. Can we create 1 module for all types of GKE clusters?

It is potentially possible to create a single module for all GKE clusters, but the end user experience becomes more complicated. There are different types of clusters (public, private, Autopilot, etc.), and the type cannot be changed after creation unless you delete and recreate the cluster. Additionally, certain attributes apply to only one kind of cluster, and a single common module would need to expose them all as variables, which could be confusing. For these reasons, we publish some of these variant modules separately.

19. What is the difference between Terraform's "plan" and "apply" commands, and how do they relate to the infrastructure-as-code (IaC) paradigm?

Plan evaluates your configuration and identifies changes to be made to resources. Technically, plan issues read-only requests to your provider’s API as a way to compare your desired state versus the actual. The apply command implements the changes to be made to resources using a series of create, read, update, and delete commands to the provider’s API. More formally, you can consider a plan as a form of integration testing. Apply runs the automation to change resources in an imperative, idempotent manner and prioritizes immutability in order to maintain consistency and stability in automation.

20. Can we use the Terraform count meta-argument to conditionally create resources based on a variable value, even if that variable is not known until runtime?

`count` requires a value that is known before any remote operations take place, which means that the variable must be defined before you run an apply. Therefore, you can pass an input variable to `count`, but you cannot pass resource attributes whose values are not known until apply.
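As a minimal sketch of this behavior, the configuration below toggles a resource with a boolean input variable. The variable name `create_bucket`, the bucket name, and the location are hypothetical and chosen only for illustration.

```hcl
# Hypothetical input variable. Its value is known at plan time because it
# comes from a default, a .tfvars file, or a -var flag.
variable "create_bucket" {
  type    = bool
  default = false
}

# count evaluates to 1 or 0 during planning, so the bucket is created only
# when the variable is true.
resource "google_storage_bucket" "logs" {
  count    = var.create_bucket ? 1 : 0
  name     = "example-logs-bucket" # placeholder; bucket names must be globally unique
  location = "US"
}

# With count set, the resource is a list. one() returns the single element,
# or null when the list is empty.
output "bucket_name" {
  value = one(google_storage_bucket.logs[*].name)
}
```

If the `count` expression instead depended on an attribute that is only computed during apply, such as a generated ID of another resource, Terraform would report that the value cannot be determined until apply.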

21. What is the ideal way to structure a terraform repo/deployment for multi-tenant, hybrid architectures?

Keep different providers in separate state when possible. This makes it easier to change resources in isolation and limits the blast radius of changes to one tenant. Even better, separate each tenant into its own state. Shared resources should go into their own repository and state as well, with each tenant referencing the outputs of the shared resources. Kubernetes is a good example: you would create the network and Kubernetes cluster in one repository with its own state, and any resources deployed to Kubernetes would reference the cluster information from that state or from a configuration manager. It helps to invert your dependencies with a layer of abstraction, like Kubernetes or Terraform outputs, and use that layer to look up the metadata you need for downstream dependencies. If you have multiple environments, you can either subdivide them by folder in one repository, with separate state for each folder, or break them out into different repositories. The right choice depends on your access control and production requirements.
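One way to sketch the pattern of tenants referencing shared outputs is with the `terraform_remote_state` data source. The example assumes the shared state is stored in a Cloud Storage backend and that the shared configuration publishes outputs named `cluster_name` and `cluster_location`. The bucket, prefix, and output names are hypothetical.

```hcl
# In a tenant's configuration: read the outputs of the shared state.
# Bucket and prefix are placeholders for wherever the shared state lives.
data "terraform_remote_state" "shared" {
  backend = "gcs"

  config = {
    bucket = "example-shared-tfstate"
    prefix = "shared/platform"
  }
}

# Hypothetical outputs published by the shared configuration.
locals {
  cluster_name     = data.terraform_remote_state.shared.outputs.cluster_name
  cluster_location = data.terraform_remote_state.shared.outputs.cluster_location
}

# Look up the shared cluster using only the metadata exposed as outputs,
# so the tenant never manages the cluster itself.
data "google_container_cluster" "shared" {
  name     = local.cluster_name
  location = local.cluster_location
}
```

Because the tenant depends only on the published outputs, the shared configuration can change its internals without touching tenant state, as long as the outputs stay stable.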

22. Is there any way to create a copy of an existing GCP project using Terraform and Google Cloud Storage?

Google Cloud has functionality built on gcloud that lets you automatically generate Terraform configurations for your project. See the export your Google Cloud resources documentation for additional details.

23. How to approach Terraform destroy that fails in the middle?

If you're really committed to destroying all those resources, you can run `terraform destroy` again. Terraform will pick up where it left off, identify the resources that still exist, and try to destroy them. Sometimes the destroy still doesn't work because other dependencies exist and the cloud provider’s API returns an error. You may have to remove that resource manually as a break-glass approach and then re-run the destroy. If Terraform still gets stuck, consider using `terraform state rm` to take the resource out of Terraform's state, and then delete the resource manually in the cloud provider.

24. How much easier is it to implement security products such as "Cloud Armor" using Terraform? Is it better to use Terraform for such scenarios or is Google Console a better option for it?

Configuring products with a complex set of parameters, like Cloud Armor, can be easier with Terraform if you are familiar with the tool. It lets you see all the parameters in a single file, which makes them easier to review, and if you use Git, you can see how the policy changed over time. You could also build more streamlined interfaces using modules. We maintain a Cloud Armor module, which you might find useful.
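To illustrate what seeing all the parameters in a single file can look like, here is a minimal sketch of a Cloud Armor policy that uses the `google_compute_security_policy` resource directly, rather than the module mentioned above. The policy name, descriptions, and blocked IP range are placeholders.

```hcl
resource "google_compute_security_policy" "example" {
  name = "example-policy" # placeholder name

  # Deny traffic from a placeholder range (reserved for documentation).
  rule {
    action      = "deny(403)"
    priority    = 1000
    description = "Block a known-bad range"

    match {
      versioned_expr = "SRC_IPS_V1"
      config {
        src_ip_ranges = ["203.0.113.0/24"]
      }
    }
  }

  # Default rule at the lowest priority: allow everything else.
  rule {
    action      = "allow"
    priority    = 2147483647
    description = "Default rule"

    match {
      versioned_expr = "SRC_IPS_V1"
      config {
        src_ip_ranges = ["*"]
      }
    }
  }
}
```

With the policy in a file like this, a change to a rule shows up as an ordinary diff in version control.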

25. Is there a high level blueprint language wrapper that can generate Terraform for you?

HashiCorp Configuration Language (HCL), the default Terraform language, *is* a high-level language. If you prefer, you may also use JSON. Additionally, CDK for Terraform (CDKTF) allows you to define your infrastructure in popular programming languages such as TypeScript, Python, Java, C#, and Go.

26. Is there a rollback feature in Terraform for accidental "destroy" scenarios (apart from re-executing the code)?

Terraform has no rollback feature, because destroy permanently removes the resources. Changes in Terraform rely on a roll-forward approach. If you are concerned about accidentally deleting important resources, check whether the resource has an attribute that prevents deletion, or use policy as code to check the plan for any destroy actions.
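As a sketch of the two kinds of safeguards, the example below combines a resource-level attribute with Terraform's `lifecycle` block. It uses a Cloud SQL instance only as an illustration, since that resource exposes a `deletion_protection` argument. The instance name, region, database version, and tier are placeholders.

```hcl
resource "google_sql_database_instance" "main" {
  name             = "example-instance" # placeholder
  region           = "us-central1"
  database_version = "POSTGRES_15"

  # Resource-level safeguard: the provider refuses to delete the instance
  # while this is true.
  deletion_protection = true

  settings {
    tier = "db-f1-micro"
  }

  # Terraform-level safeguard: any plan that would destroy this resource
  # fails with an error instead.
  lifecycle {
    prevent_destroy = true
  }
}
```

Note that `prevent_destroy` only takes effect while the resource block remains in the configuration, so it is a guard against accidents rather than a substitute for backups.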

27. Is there any good Terraform visualization tool for inspecting dependencies?

Terraform has a `graph` command that generates a visual representation of either a configuration or an execution plan. You can read more about it at https://developer.hashicorp.com/terraform/cli/commands/graph.

I also recommend https://github.com/im2nguyen/rover. It is a great visualization tool for Terraform dependencies.

If you have any questions that weren’t addressed, please leave a comment below and someone from the Community or Google Cloud and Terraform teams will be happy to help.

Have feedback or suggestions about using Terraform on Google Cloud? We’d love to hear from you! Please leave a comment below.

A special thank you to the HashiCorp Terraform team, Ray Ploski, Rosemary Wang, Krysta Rose, Mina Kwan, Tricia Apperson, and the Google Cloud team, including Arslan Saeed and Bharath Baiju, for their partnership and contributions.