A standard for autonomous vehicle componentisation

The 44th MPAI General Assembly has published three Calls for Technologies. The Connected Autonomous Vehicle – Technologies (CAV-TEC) Call invites parties holding rights to technologies that satisfy the CAV-TEC Use Cases and Functional Requirements and the CAV-TEC Framework Licence to respond, preferably using the CAV-TEC Template for Responses. An online presentation of this Call will be held on 2024/06/06 (Thursday) at 16:00 UTC. Please register if you wish to attend the presentation (recommended if you intend to respond).

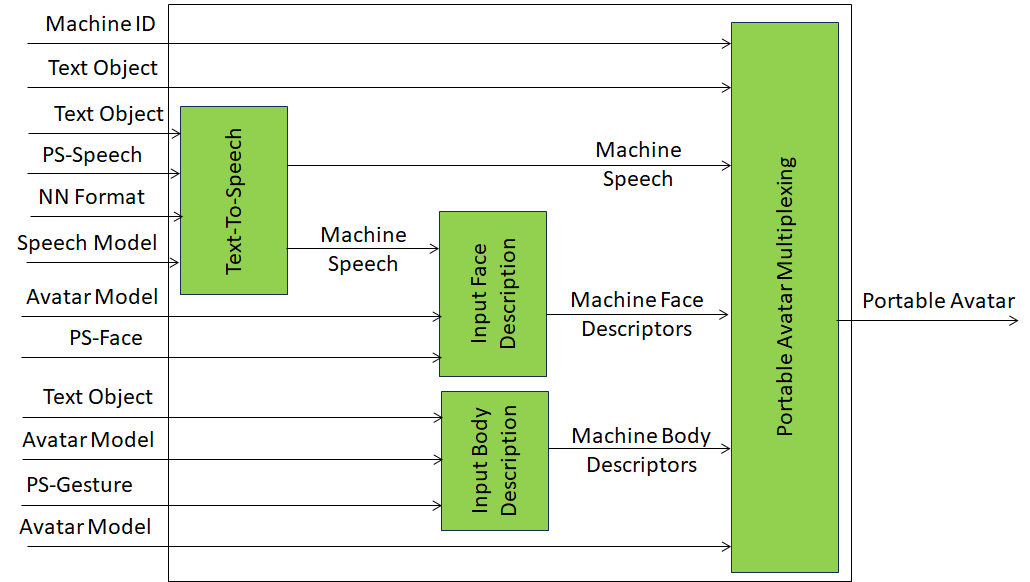

MPAI kicked off the Connected Autonomous Vehicle (MPAI-CAV) project in the first days after its establishment. The project proved particularly challenging, and only in September 2023 was MPAI ready to publish Version 1.0 of Technical Specification: Connected Autonomous Vehicle (MPAI-CAV) – Architecture (CAV-ARC). This specifies a CAV as a system composed of Subsystems, for each of which the functions, input/output data, and topology of components are specified. In turn, each Subsystem is broken down into Components whose functions and input/output data are specified. Each Subsystem is assumed to be implemented as an AI Workflow (AIW) made of Components implemented as AI Modules (AIM), executed in an AI Framework (AIF) as specified by the AI Framework (MPAI-AIF) standard.

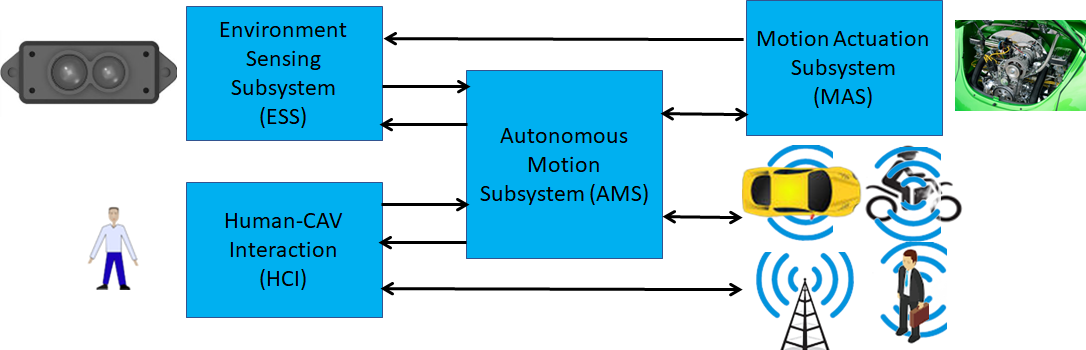

This is illustrated in Figure 1, where a human outside a CAV interacts with it via the Human-CAV Interaction Subsystem (HCI), requesting the Autonomous Motion Subsystem (AMS) to take them to a destination. The AMS requests spatial information from the Environment Sensing Subsystem (ESS), decides a route (possibly after consulting the HCI and the human), and starts the travel. The spatial information provided by the ESS is used to create a model of the external environment, which may be integrated with environment models obtained from other CAVs in range. Finally, the AMS has enough information to command the Motion Actuation Subsystem (MAS) to move the CAV to the desired place, which can be close if the environment is “complex” or farther away if it is “simple”.

Figure 1 – The reference model of MPAI Connected Autonomous Vehicle
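To make the Subsystem/Component decomposition and the request flow of Figure 1 more concrete, here is a minimal Python sketch. It assumes that each Subsystem can be modelled as an AI Workflow that simply runs its AI Modules in sequence. The classes `AIM` and `AIW`, the function names, and the step distances are all hypothetical illustrations, not the MPAI-AIF API or the CAV-ARC interfaces, and the AI Framework that would execute the workflows is omitted.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AIM:
    """Hypothetical AI Module: a named function from input data to output data."""
    name: str
    run: Callable[[Dict], Dict]


@dataclass
class AIW:
    """Hypothetical AI Workflow (one CAV Subsystem): runs its AIMs in sequence."""
    name: str
    aims: List[AIM] = field(default_factory=list)

    def process(self, data: Dict) -> Dict:
        for aim in self.aims:
            # Each AIM adds its outputs to the data flowing through the workflow.
            data = {**data, **aim.run(data)}
        return data


def interpret_request(data: Dict) -> Dict:
    # HCI: extract the destination from a request such as "take me to X".
    return {"destination": data["request"].split("take me to ", 1)[-1].strip()}


def sense_environment(data: Dict) -> Dict:
    # ESS: report how "complex" the surroundings are (a stand-in for real sensing).
    return {"environment": {"complexity": data.get("scene", "simple")}}


def plan_motion(data: Dict) -> Dict:
    # AMS: short step in a complex environment, longer step in a simple one.
    # The distances (metres) are illustrative only.
    step = 5.0 if data["environment"]["complexity"] == "complex" else 50.0
    return {"command": {"target": data["destination"], "step_m": step}}


def actuate(data: Dict) -> Dict:
    # MAS: a real subsystem would drive the vehicle; here it echoes the command.
    return {"executed": data["command"]}


hci = AIW("HCI", [AIM("RequestInterpreter", interpret_request)])
ess = AIW("ESS", [AIM("EnvironmentSensor", sense_environment)])
ams = AIW("AMS", [AIM("MotionPlanner", plan_motion)])
mas = AIW("MAS", [AIM("Actuator", actuate)])


def travel(request: str, scene: str) -> Dict:
    # The HCI passes the destination on, spatial information is obtained
    # from the ESS, and the AMS then issues a command to the MAS.
    data = hci.process({"request": request})
    data = ess.process({**data, "scene": scene})
    data = ams.process(data)
    return mas.process(data)["executed"]
```

For example, `travel("take me to the station", "complex")` returns `{"target": "the station", "step_m": 5.0}`, while a `"simple"` scene yields a 50-metre step. Treating each Subsystem as a pipeline of replaceable modules mirrors the componentisation idea: any AIM could be swapped for another implementation with the same inputs and outputs.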

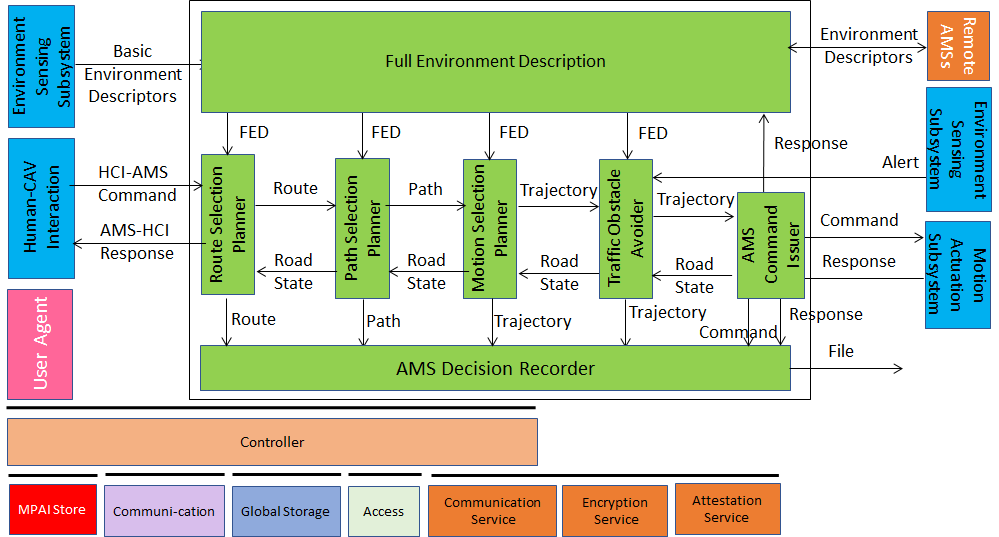

CAV-ARC is a functional specification in the sense that it identifies subsystems and components and their functions, but does not precisely specify the data they exchange. This can be seen in Figure 2 where, for example, the Road State data type is identified and its function generally described, but its functional requirements are not fully specified.

Figure 2 – The Autonomous Motion Subsystem

The purpose of the CAV-TEC Call is to identify and characterise all the data types required by the CAV reference model and stimulate specific technology proposals.

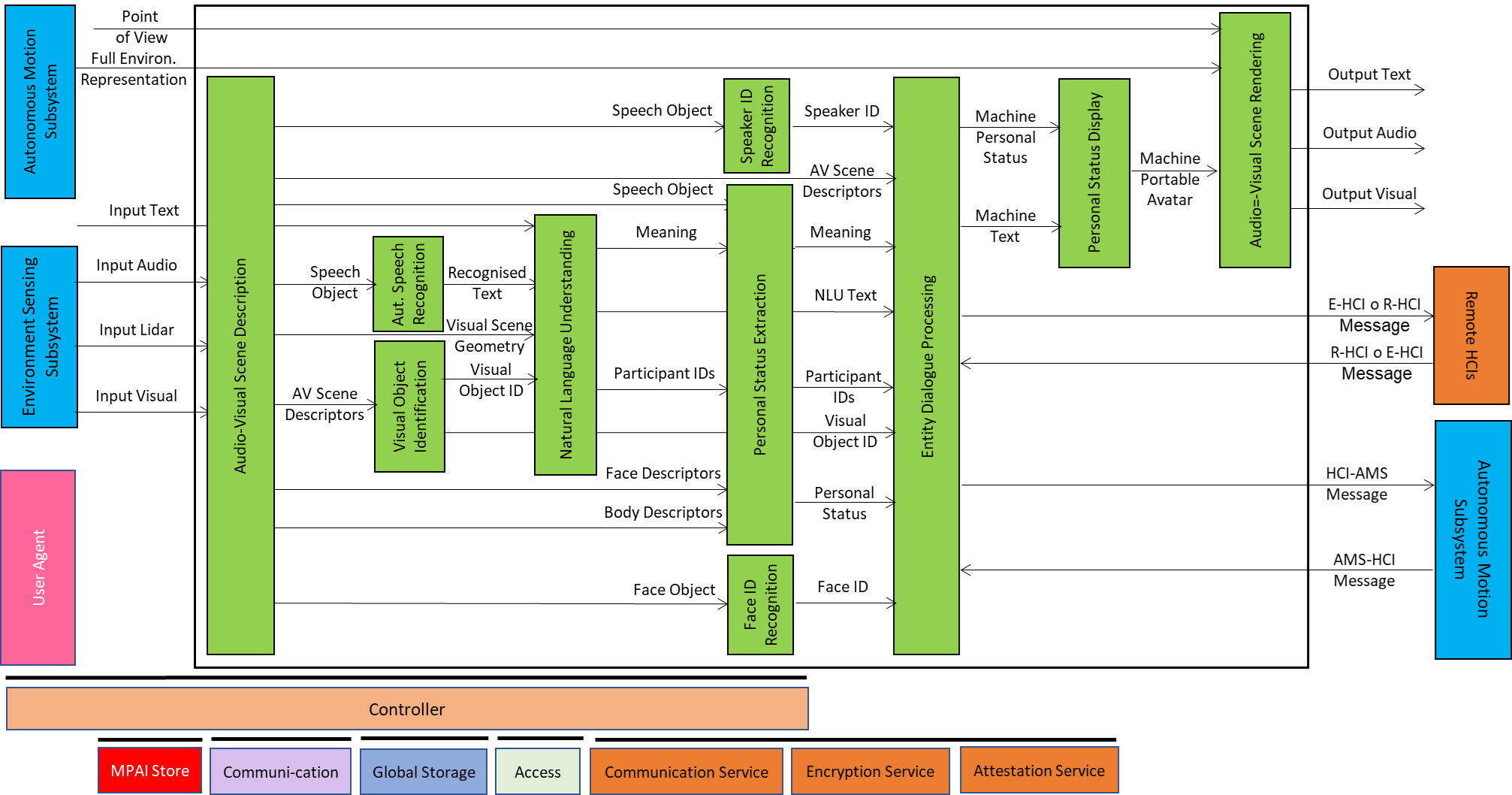

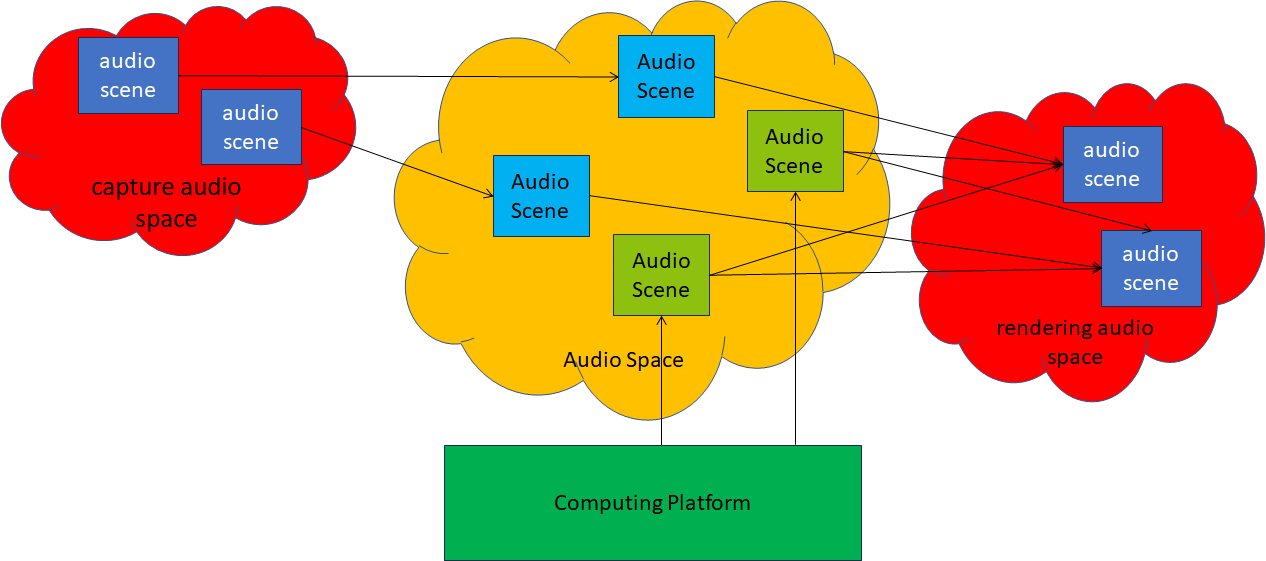

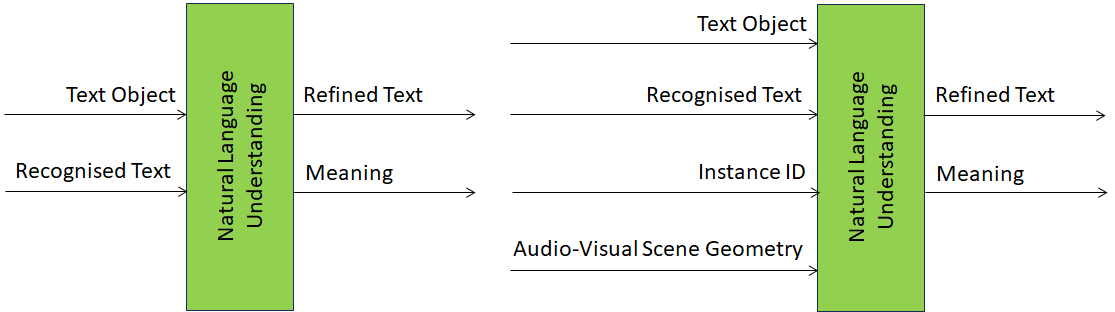

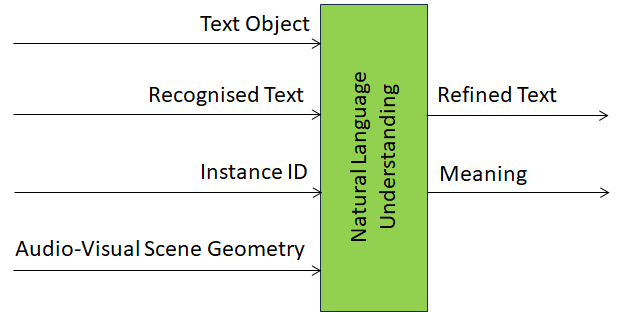

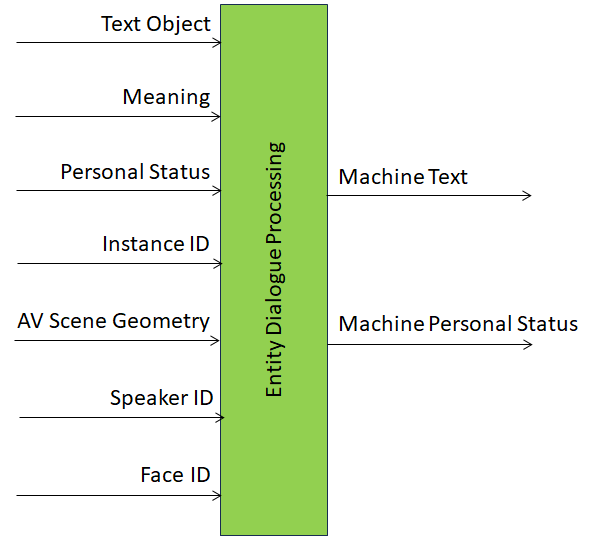

Because many components of the HCI are shared with other MPAI standards, in September 2023 MPAI published Multimodal Conversation (MPAI-MMC) V2.0, which includes an HCI specification whose scope goes beyond that of CAV-ARC. Most of the CAV-ARC data types of the CAV-HCI reference model of Figure 3 are already fully specified.

Figure 3 – The Human-CAV Interaction Subsystem

What is still missing – and is part of the Call – is the full specification of the messages exchanged by the HCI with the AMS and its peer HCIs in remote CAVs.

The Use Cases and Functional Requirements document attached to the Call contains an initial JSON syntax and semantics for all data types, and requests comments on their appropriateness as well as proposals for data type formats and attributes.
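The distinction between the syntax and the semantics of a JSON data type can be illustrated with a hypothetical version of the Road State data type mentioned for the AMS. The sketch below is an assumption throughout: the attribute names `road_id`, `surface`, and `speed_limit_kmh` and the allowed values are invented for illustration and are not taken from the Call. It shows one way a receiving component might check that a message has the expected attributes (syntax) and that their values make sense (semantics), using only Python's standard `json` and `dataclasses` modules.

```python
import json
from dataclasses import asdict, dataclass, fields


@dataclass
class RoadState:
    road_id: str          # hypothetical identifier of the road segment
    surface: str          # hypothetical condition: "dry", "wet" or "icy"
    speed_limit_kmh: int  # hypothetical speed limit in km/h


ALLOWED_SURFACES = {"dry", "wet", "icy"}


def to_json(state: RoadState) -> str:
    # Serialise with sorted keys so that equal states give identical text.
    return json.dumps(asdict(state), sort_keys=True)


def from_json(text: str) -> RoadState:
    obj = json.loads(text)
    # Syntax: the message must be an object with exactly the expected attributes.
    expected = {f.name for f in fields(RoadState)}
    if not isinstance(obj, dict) or set(obj) != expected:
        raise ValueError(f"expected attributes {sorted(expected)}")
    # Semantics: attribute values must be meaningful.
    surface = obj["surface"]
    if not isinstance(surface, str) or surface not in ALLOWED_SURFACES:
        raise ValueError(f"unknown surface {surface!r}")
    limit = obj["speed_limit_kmh"]
    if isinstance(limit, bool) or not isinstance(limit, int) or limit <= 0:
        raise ValueError("speed_limit_kmh must be a positive integer")
    return RoadState(**obj)
```

For instance, `to_json(RoadState("A1-km42", "wet", 90))` produces `{"road_id": "A1-km42", "speed_limit_kmh": 90, "surface": "wet"}`, which `from_json` turns back into an equal object, whereas a message with `"surface": "snowy"` is rejected. Comments on the Call would, in effect, debate which attributes and value ranges such checks should enforce.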

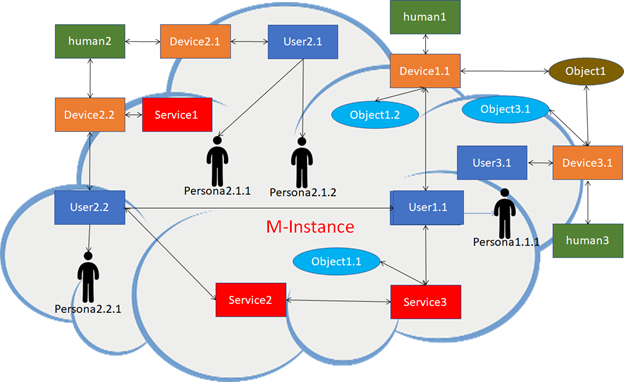

It is interesting to note that MPAI assumes that a CAV generates a “private” metaverse that it uses to plan how to move in the real environment. A CAV may request part of another CAV's private metaverse, and the requested CAV may decide to share it, to facilitate understanding of the common real space(s) they traverse. MPAI investigations have shown that a CAV’s private metaverse can be represented _and_ shared using the same, or slightly extended, MPAI-MMM metaverse technologies.
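The request-and-share exchange can be sketched in a few lines. This is a generic illustration, not the MPAI-MMM data model: `SceneObject`, the `private` flag, the rectangular `Region`, and the merge rule are hypothetical, and both CAVs are assumed to already use a common coordinate frame (a real system would have to align frames first). The requested CAV decides what to share, here only non-private objects inside the requested area, and the requesting CAV integrates what it receives into its own model.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class SceneObject:
    obj_id: str
    kind: str              # e.g. "vehicle", "pedestrian"
    x: float               # metres, in a frame both CAVs are assumed to share
    y: float
    private: bool = False  # hypothetical flag: the owner never shares this object


Region = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def answer_share_request(model: List[SceneObject], region: Region) -> List[SceneObject]:
    # The requested CAV's decision: share non-private objects inside the region.
    x0, y0, x1, y1 = region
    return [o for o in model
            if not o.private and x0 <= o.x <= x1 and y0 <= o.y <= y1]


def merge(own: List[SceneObject], received: List[SceneObject]) -> List[SceneObject]:
    # The requesting CAV keeps its own entry when an object id is already known.
    known = {o.obj_id for o in own}
    return own + [o for o in received if o.obj_id not in known]
```

As a usage example, if CAV B's model holds a pedestrian `p1` at (10, 2), a vehicle `v7` at (80, 0), and a private entry at (12, 1), a request for the region (0, -5, 50, 5) returns only `p1`; merging that into CAV A's model, which already contains `v7`, gives the objects `v7` and `p1`.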

This observation has been put into practice, and part of Technical Specification: MPAI Metaverse Model (MPAI-MMM) – Architecture (MMM-ARC) V1.1 is referenced by the CAV-TEC Call. Note that the parallel MMM-TEC Call for Technologies seeks to enhance the current MMM-ARC specification by providing an initial JSON syntax and semantics for all data types, and requests comments on their appropriateness as well as proposals for data type formats and attributes.

Making the dream of autonomous vehicles a reality will be a major contribution to improving our lives and our environment. Standards can greatly help turn CAVs from siloed systems into systems made of standard components that are more reliable, explainable, and affordable.